Custom function decorators with TurboGears 2

I am exposing some library functions using a TurboGears2 controller (see web-api-with-turbogears2). It turns out that some functions return a dict, some a list, some a string, and TurboGears 2 only allows JSON serialisation for dicts.

A simple work-around for this is to wrap the function result into a dict, something like this:

    @expose("json")
    @validate(validator_dispatcher, error_handler=api_validation_error)
    def list_colours(self, filter=None, productID=None, maxResults=100, **kw):
        # Call API
        res = self.engine.list_colours(filter, productID, maxResults)
        # Return result
        return dict(r=res)

It would be nice, however, to have a @webapi decorator that automatically wraps the function result with the dict:

    def webapi(func):
        def dict_wrap(*args, **kw):
            return dict(r=func(*args, **kw))
        return dict_wrap

    # ...in the controller...

    @expose("json")
    @validate(validator_dispatcher, error_handler=api_validation_error)
    @webapi
    def list_colours(self, filter=None, productID=None, maxResults=100, **kw):
        # Call API
        res = self.engine.list_colours(filter, productID, maxResults)
        # Return result
        return res

This works, as long as @webapi appears last in the list of decorators, that is, closest to the function definition. Decorators are applied from the bottom up, so if it appears last it will be the first to wrap the function, and so it will not interfere with the tg.decorators machinery.
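To make the ordering concrete, here is a minimal sketch using stand-in decorators that only record when they are applied. Nothing in it is TurboGears code: `tracing` and the `applied` list are hypothetical names made up for the illustration.

```python
# Stand-in decorators (not TurboGears code) that record the order in
# which they are applied to the function.
applied = []

def tracing(name):
    """Build a decorator that logs its name when it wraps a function."""
    def decorator(func):
        applied.append(name)
        return func
    return decorator

@tracing("expose")
@tracing("validate")
@tracing("webapi")
def list_colours():
    return ["red", "green"]

print(applied)  # ['webapi', 'validate', 'expose']
```

The decorator written last is applied first, which is why a plain wrapper placed there never sees anything that the decorators above it attach to the function.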

Would it be possible to create a decorator that can be put anywhere among the decorator list? Yes, it is possible but tricky, and it gives me the feeling that it may break in any future version of TurboGears:

    class webapi(object):
        def __call__(self, func):
            def dict_wrap(*args, **kw):
                return dict(r=func(*args, **kw))
            # Migrate the decoration attribute to our new function
            if hasattr(func, 'decoration'):
                dict_wrap.decoration = func.decoration
                dict_wrap.decoration.controller = dict_wrap
                delattr(func, 'decoration')
            return dict_wrap

    # ...in the controller...

    @expose("json")
    @validate(validator_dispatcher, error_handler=api_validation_error)
    @webapi()  # webapi is now a class, so it must be instantiated
    def list_colours(self, filter=None, productID=None, maxResults=100, **kw):
        # Call API
        res = self.engine.list_colours(filter, productID, maxResults)
        # Return result
        return res
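The migration step can be tried outside TurboGears with a small simulation. This is only a sketch under assumptions: `FakeDecoration` and `fake_expose` are hypothetical stand-ins that mimic the one behaviour relied on above (state stored in a `decoration` attribute on the function); the real objects in tg.decorators carry more state than this.

```python
class FakeDecoration(object):
    """Hypothetical stand-in for the state TurboGears attaches to a controller."""
    def __init__(self, controller):
        self.controller = controller
        self.engines = []

def fake_expose(engine):
    """Stand-in for expose(): store settings on func.decoration, return func."""
    def decorator(func):
        if not hasattr(func, "decoration"):
            func.decoration = FakeDecoration(func)
        func.decoration.engines.append(engine)
        return func
    return decorator

class webapi(object):
    def __call__(self, func):
        def dict_wrap(*args, **kw):
            return dict(r=func(*args, **kw))
        # Move any decoration found on func over to the wrapper
        if hasattr(func, "decoration"):
            dict_wrap.decoration = func.decoration
            dict_wrap.decoration.controller = dict_wrap
            delattr(func, "decoration")
        return dict_wrap

# Here webapi is deliberately NOT the last decorator
@webapi()
@fake_expose("json")
def list_colours():
    return ["red", "green"]

print(list_colours())                     # {'r': ['red', 'green']}
print(list_colours.decoration.engines)    # ['json']
```

Without the migration, the settings recorded by the decorator underneath would stay on the inner function, and the wrapper handed to the framework would look undecorated.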

As a convenience, TurboGears 2 offers, in the decorators module, a way to build decorator "hooks":

    class before_validate(_hook_decorator):
        '''A list of callables to be run before validation is performed'''
        hook_name = 'before_validate'

    class before_call(_hook_decorator):
        '''A list of callables to be run before the controller method is called'''
        hook_name = 'before_call'

    class before_render(_hook_decorator):
        '''A list of callables to be run before the template is rendered'''
        hook_name = 'before_render'

    class after_render(_hook_decorator):
        '''A list of callables to be run after the template is rendered.

        Will be run before it is returned up the WSGI stack'''
        hook_name = 'after_render'

The way these are invoked can be found in the _perform_call function in tg/controllers.py.

To show an example use of those hooks, let's add some polygen wisdom to every data structure we return:

    from subprocess import Popen, PIPE
    from tg import decorators

    class wisdom(decorators.before_render):
        def __init__(self, grammar):
            super(wisdom, self).__init__(self.add_wisdom)
            self.grammar = grammar

        def add_wisdom(self, remainder, params, output):
            # Run polyrun on the grammar and store its output in the result
            output["wisdom"] = Popen(["polyrun", self.grammar], stdout=PIPE).communicate()[0]

    # ...in the controller...

    @wisdom("genius")
    @expose("json")
    @validate(validator_dispatcher, error_handler=api_validation_error)
    def list_colours(self, filter=None, productID=None, maxResults=100, **kw):
        # Call API
        res = self.engine.list_colours(filter, productID, maxResults)
        # Return a dict, so that the hook has somewhere to add its key
        return dict(r=res)

These hooks cannot, however, be used for what I need, that is, to wrap the result inside a dict. The reason is that they are called in this way:

    controller.decoration.run_hooks(
        'before_render', remainder, params, output)

and not in this way:

    output = controller.decoration.run_hooks(
        'before_render', remainder, params, output)

So it is possible to modify the output (if it is a mutable structure) but not to exchange it with something else.
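The difference between modifying and exchanging can be shown with a few lines of plain Python. This is a sketch, not the TurboGears implementation: `run_hooks` here is a hypothetical stand-in whose only relevant property is that it discards whatever the hooks return.

```python
def run_hooks(hooks, output):
    """Stand-in hook runner: calls each hook and ignores its return value."""
    for hook in hooks:
        hook(output)

def add_key(output):
    # Mutates the very object the caller is holding: the change is visible
    output["wisdom"] = "42"

def try_to_wrap(output):
    # Rebinds a local name only: the caller's object is untouched
    output = dict(r=output)
    return output  # discarded by run_hooks

output = {"colours": ["red"]}
run_hooks([add_key, try_to_wrap], output)
print(output)  # {'colours': ['red'], 'wisdom': '42'}
```

The added key survives, while the attempt to wrap the result in a new dict is lost, because the caller never rebinds its own `output` to anything the hooks produce.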

Can we do even better? Sure we can. We can fold @expose and @validate into @webapi, to avoid repeating the same decorator lines over and over again:

    import pylons
    from tg import expose, validate

    class webapi(object):
        def __init__(self, error_handler=None):
            self.error_handler = error_handler

        def __call__(self, func):
            def dict_wrap(*args, **kw):
                return dict(r=func(*args, **kw))
            # Apply the usual decorators on top of our wrapper
            res = expose("json")(dict_wrap)
            res = validate(validator_dispatcher, error_handler=self.error_handler)(res)
            return res

    # ...in the controller...

    @expose("json")
    def api_validation_error(self, **kw):
        pylons.response.status = "400 Error"
        return dict(e="validation error on input fields", form_errors=pylons.c.form_errors)

    @webapi(error_handler=api_validation_error)
    def list_colours(self, filter=None, productID=None, maxResults=100, **kw):
        # Call API
        res = self.engine.list_colours(filter, productID, maxResults)
        # Return result
        return res

This got rid of @expose and @validate, and provides almost all the default values that I need. Unfortunately I could not find out how to access api_validation_error from the decorator so that I can pass it to the validator, so I am left with the inconvenience of having to pass it explicitly every time.



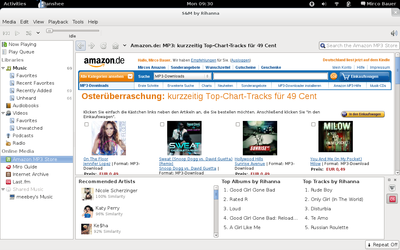

Last night I wanted to buy

Last night I wanted to buy

After recent switch of this blog to Django, I've also planned to switch rest of my website to use same engine. Most of the code is already written, however I've found some forgotten parts, which nobody used for really a long time.

As most of the things there is free software, I don't like idea removing it completely, however it is quite unlikely that somebody will want to use these ancient things. Especially when it quite lacks documentation and I really forgot about that (most of them being Turbo Pascal things for DOS, Delphi components for Windows and so on). Anyway it will probably live only on

After recent switch of this blog to Django, I've also planned to switch rest of my website to use same engine. Most of the code is already written, however I've found some forgotten parts, which nobody used for really a long time.

As most of the things there is free software, I don't like idea removing it completely, however it is quite unlikely that somebody will want to use these ancient things. Especially when it quite lacks documentation and I really forgot about that (most of them being Turbo Pascal things for DOS, Delphi components for Windows and so on). Anyway it will probably live only on  I'm a terrible hoarder. I hang onto old stuff because I think it might be

fun to have a look at again later, when I've got nothing to do. The problem

is, I never have nothing to do, or when I do, I never think to go through

the stuff I've hoarded. As time goes by, the technology becomes more and

more obsolete to the point where it becomes impractical to look at it.

Today's example: the 3.5" floppy disk. I've got a disk holder thingy with

floppies in it dating back to the mid-nineties and earlier. Stuff from high

school, which I thought might be a good for a giggle to look at again some

time.

In the spirit of

I'm a terrible hoarder. I hang onto old stuff because I think it might be

fun to have a look at again later, when I've got nothing to do. The problem

is, I never have nothing to do, or when I do, I never think to go through

the stuff I've hoarded. As time goes by, the technology becomes more and

more obsolete to the point where it becomes impractical to look at it.

Today's example: the 3.5" floppy disk. I've got a disk holder thingy with

floppies in it dating back to the mid-nineties and earlier. Stuff from high

school, which I thought might be a good for a giggle to look at again some

time.

In the spirit of  H&R Block is like a KFC for your chicken.

No, not really.

H&R Block is like a KFC for your chicken.

No, not really.

This has robbed me of several days of my life, so I want to bring Google juice this this problem.

IF you have a Pylons or TurboGears application or anything else that uses the fantastic EvalException WSGI middleware for web debugging of you web program and have the following symptom:

* on a crash the traceback page shows up, but it has no css style and no images

This has robbed me of several days of my life, so I want to bring Google juice this this problem.

IF you have a Pylons or TurboGears application or anything else that uses the fantastic EvalException WSGI middleware for web debugging of you web program and have the following symptom:

* on a crash the traceback page shows up, but it has no css style and no images Site was down for almost a week, due to my

Site was down for almost a week, due to my  ) will come to the rescue.

) will come to the rescue.

All ARM based QNAP machines can turn on automatically when power is

applied if the device was not powered down correctly. This is helpful

when your power goes down. Follow the instructions below if you want to

turn automatic power on using Debian on a QNAP TS-109, TS-119, TS-209,

TS-219, TS-409 or TS-409U.

Edit the file /etc/apt/sources.list and add the following line:

All ARM based QNAP machines can turn on automatically when power is

applied if the device was not powered down correctly. This is helpful

when your power goes down. Follow the instructions below if you want to

turn automatic power on using Debian on a QNAP TS-109, TS-119, TS-209,

TS-219, TS-409 or TS-409U.

Edit the file /etc/apt/sources.list and add the following line: